The power of choice is the bedrock of every functional, equitable ecosystem, one where all participants can make informed decisions for themselves. Nothing can replace it.

Every day, people are offered false dilemmas designed to strip them of their choice. They are forced to “choose” between accepting lopsided terms of service for the products and services they need or being excluded altogether. My Data Union was created to help people reclaim their choice in a marketplace that habitually disregards it. Let’s explore how to identify false dilemmas, the spectrum of choices people do not realize they are missing, and what people can do about it.

The purpose of creating a false dilemma is to manipulate the chooser into believing the only viable options are the ones presented to them. Real choice is not an all-or-nothing decision, and presenting it as such is a time-tested way to deceive someone into doing something they probably do not want to do. Parents play the false dilemma game all the time to quell the mini-rebellions conducted by their children. Does this exchange sound familiar?

Dad: “It’s late and time for you to go to bed.”

Son: “But I don’t wanna! You are so mean to me! I won’t go willingly!”

Dad: “Fine. Do you want to go to bed now or after you’ve cleaned your room?”

Son: “Wow it’s so late! I’m going to bed.”

Creating false dilemmas in the household is an acceptable battle tactic between parent and child. Dad knows his son needs to go to bed, but getting him there requires finesse to avoid confrontation. Dad’s proposition of “go to bed now” or “after you’ve cleaned your room” intentionally skips other permutations that Dad does not want to entertain.

Outside of the household, false dilemma tactics are a form of gaslighting that is both unfriendly and unprofessional. Consent happens with nuance between people and businesses that respect a mutually beneficial relationship and welcome difficult discussions. Unfortunately, people’s choice and consent are routinely stripped away, forcing consumers to surrender their digital property to middlemen who control and monetize it instead.

To thwart those who conduct business through false dilemmas and other abusive tactics, we must first know how to recognize them and understand what other options exist. The following passage illustrates how an average consumer, Brianna, is stripped of her choice and what options she is missing.

Brianna uses App A, a popular mobile app that demands access to her device’s location via WiFi, Bluetooth, and/or GPS coordinates. While the app is in use, Brianna’s location data is sent into a black box that is exclusively controlled by App A, providing no transparency to Brianna. Depending on Brianna’s device and her settings, App A also collects her location data in the background. Some of her location data is used to make the service smoother for Brianna, but most of it is sold by the data aggregator to data brokers for a quick buck. Brianna has no way of establishing what data can be collected, understanding what it is worth, or controlling who can access it.

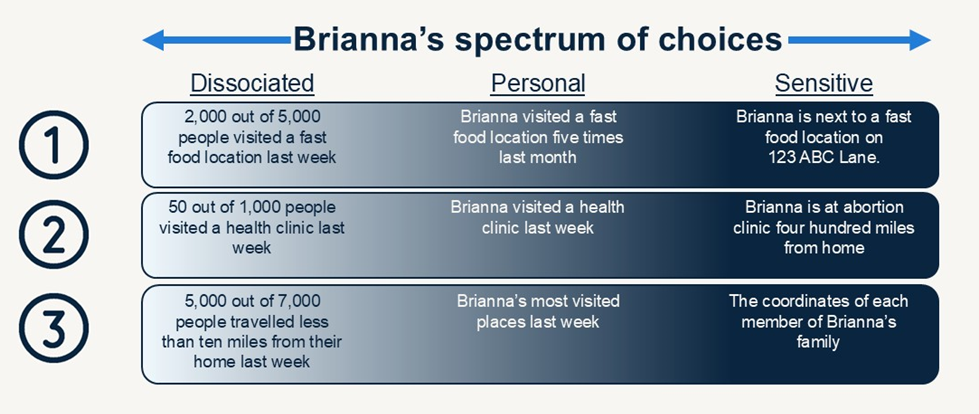

Let’s assume App A collected only Brianna’s location data (an unrealistic assumption, but one that makes a point). After a short while, the app’s developer still holds a lot of valuable and potentially invasive data about Brianna. The data ranges from dissociated to sensitive, and Brianna has no ability to limit A) what data is sold to third parties, such as data brokers, or B) what information should be kept private.

As we can see, a lot of information can be learned just by collecting location data. “Allow” and “do not allow” options do not reflect the nuance of how the data can be used or which companies should have access. For instance, Brianna may not care that fast-food brands can see her sensitive location data as she approaches 123 ABC Lane, and she may welcome the opportunity to sell them the data. Brianna may be comfortable with selling dissociated data that reveals she and 49 other people in an anonymous data lake of 1,000 consumers visited a health clinic last week, but reluctant to sell foreign-registered data brokers location data that reveals where she lives and that she recently visited an abortion clinic.[1] Similarly, Brianna could be comfortable monetizing dissociated or personal information about some of the places she visited most last week, but uncomfortable when her family’s safety and location-sharing app sells her family’s location data to data brokers that want to assemble her family’s identity puzzles.[2]

Brianna is the rightful owner and operator of her data. The sensitivity of her data is subjective, and how that data is distributed disproportionately affects her. Therefore, Brianna must be given the opportunity to control, monetize, and protect her data however she sees fit. Companies whose business models conflict with Brianna’s best interests should not be able to make such decisions for her. Likewise, the market needs a viable way to treat Brianna fairly.

My Data Union is a collective of consumers who want to reshape the data economy and assert control over their property. They recognize that the all-or-nothing, black-box approach companies use to collect their data is unacceptable, and they are organizing with others who feel the same way. My Data Union’s purpose is to provide its collective with the tools to control what data is revealed about them, education about how different companies use that data, and the ability to decide where on the spectrum of choice they wish to participate.

Organized, informed consumers create significant negotiating leverage, and My Data Union can apply that leverage to demand better treatment of its members by negotiating equitable, transparent terms of service agreements with the services its members use most. Members also gain the ability to directly access the consumer data market, where they can safely and consensually monetize their data in a way that is comfortable to them. Whether someone wants to offer all their data for sale, none at all, or something in between, My Data Union’s purpose is to provide members with the platform to make informed decisions for themselves and enforce their preferences in the marketplace. Every new member increases the collective’s strength, so if this mission appeals to you, sign up below.

[1] https://www.vice.com/en/article/location-data-firm-heat-maps-planned-parenthood-abortion-clinics-placer-ai

[2] https://thecapitolforum.com/life360-family-safety-app-selling-datasets-based-on-users-personal-information-2